October 23, 2025 | Sydney, Australia

Consulting giant Deloitte has agreed to partially refund the $440,000 AUD it was paid by the Australian federal government after a report produced partly with artificial intelligence (AI) was found to contain fabricated citations and inaccurate references.

The report, commissioned in 2024 by the Department of Employment and Workplace Relations (DEWR), assessed the Targeted Compliance Framework, the system that oversees welfare payment compliance. However, reviewers discovered that parts of the report cited non-existent academic references and included a fabricated quotation attributed to a Federal Court judgment. These errors were traced back to AI-generated "hallucinations."

Discovery of the Errors

The issue was first revealed by the Australian Financial Review (AFR) in August 2025. It later emerged that the report had been drafted partly using Microsoft Azure OpenAI GPT-4o, a large language model licensed through DEWR.

Dr. Christopher Rudge, a legal academic from the University of Sydney, examined the report and found multiple instances where academic sources were invented. “This is not just a failure of technology; it’s a failure of human oversight,” he explained. “Consultants and government departments must ensure that any AI-generated material is thoroughly verified before it is presented as evidence or analysis.”

Following the exposure, Deloitte released an updated version of the report with corrections and added a disclosure appendix acknowledging the use of AI in drafting certain sections.

Deloitte’s Response and Partial Refund

In a statement, Deloitte confirmed it would refund the final installment of its $440,000 AUD contract. The company emphasized that the AI-generated errors did not affect the report's core findings or recommendations, but acknowledged that proper validation procedures had not been followed.

A Deloitte spokesperson said:

We take full responsibility for the oversight and are implementing new review mechanisms for AI-assisted work. Our commitment to accuracy and ethical standards remains unchanged.

Some observers have described the refund, although only partial, as a significant step toward restoring public trust in the firm's government consulting work.

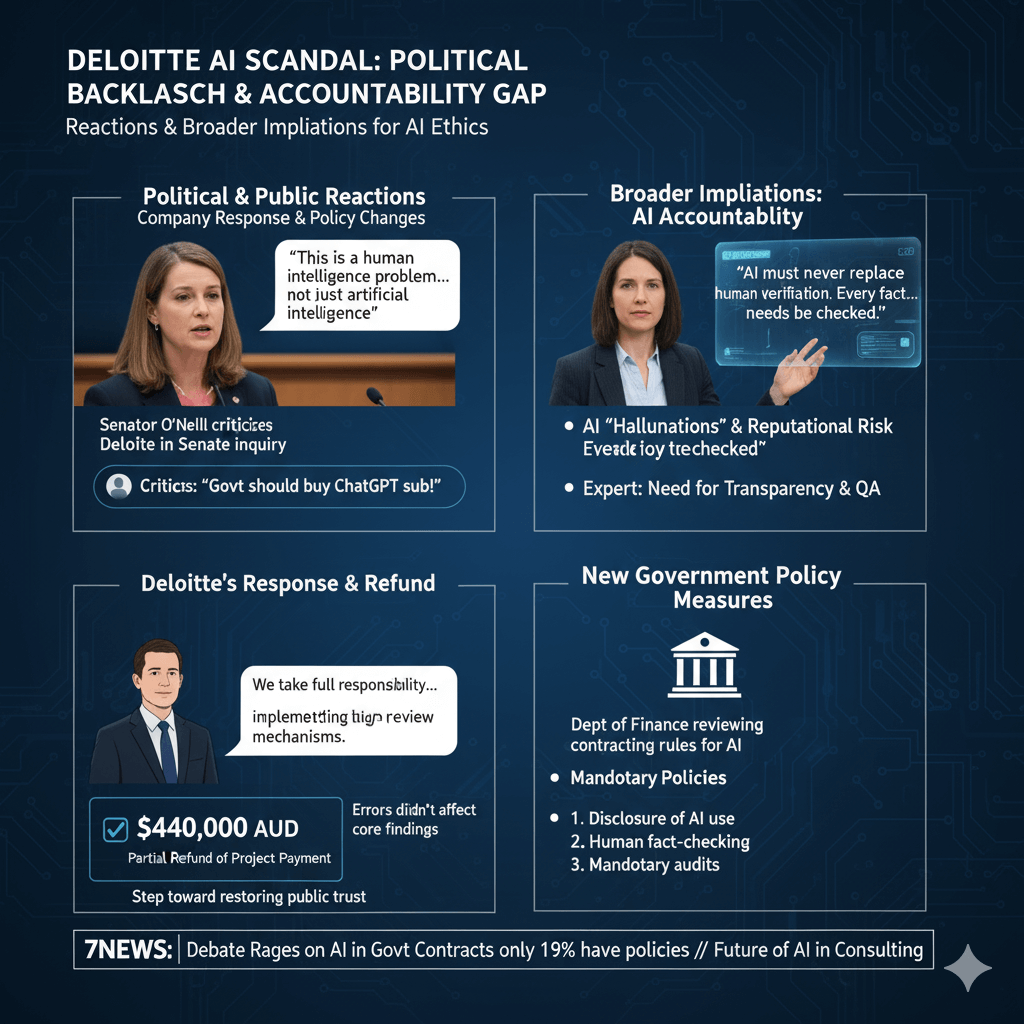

Political and Public Reactions

The case quickly attracted political attention. During a Senate inquiry in Canberra, Labor Senator Deborah O’Neill criticized Deloitte’s use of AI without proper supervision. “This is a human intelligence problem, not just artificial intelligence,” she said, adding that government agencies might “get better value from a ChatGPT subscription than from multi-million-dollar consultancy contracts.”

O’Neill’s comments sparked a broader debate about the reliance of public institutions on major consulting firms. Critics argue that many agencies are outsourcing critical thinking to private corporations that now use AI tools without sufficient transparency or human oversight.

Broader Implications for AI Accountability

Experts say the Deloitte case underscores the growing accountability gap in professional services that use AI systems. While tools like GPT-4 can significantly reduce research and drafting time, they are also prone to “hallucinations” — confident but false statements generated by language models.

Dr. Fiona Marshall, an AI governance researcher at Monash University, said:

AI can assist in data analysis and drafting, but it must never replace human verification. Every fact, reference, and citation needs to be checked. Otherwise, you risk both reputational damage and legal exposure.

She added that the Deloitte incident is “an opportunity for the industry to establish best practices for AI transparency and quality assurance.”

Government Review and New Policy Measures

The Department of Finance has since confirmed that it is reviewing its contracting rules for projects involving artificial intelligence. New policies are expected to require:

- Disclosure of AI use in all government-funded reports.

- Human fact-checking and validation for every AI-generated citation.

- Mandatory audits of AI-assisted deliverables.

These steps aim to prevent a repeat of the Deloitte incident and to ensure that AI tools are used responsibly in policy or compliance work.

Case Study: AI Use in Australian Consulting Firms

According to a 2025 report by the Office of the Australian Information Commissioner (OAIC), nearly 64% of consulting firms in Australia have experimented with AI tools for writing, analysis, or research. Yet only 19% have established internal policies for verifying AI outputs.

The Deloitte incident has quickly become a case study in professional ethics and risk management. Analysts predict that it could reshape how consulting firms integrate AI into their operations — particularly in regulated industries and government contracts.

A senior analyst at Tech Policy Review noted, “This situation illustrates a turning point. AI adoption is accelerating, but quality control frameworks have not kept up. The next few years will determine whether AI remains a trusted business tool or becomes a reputational hazard.”

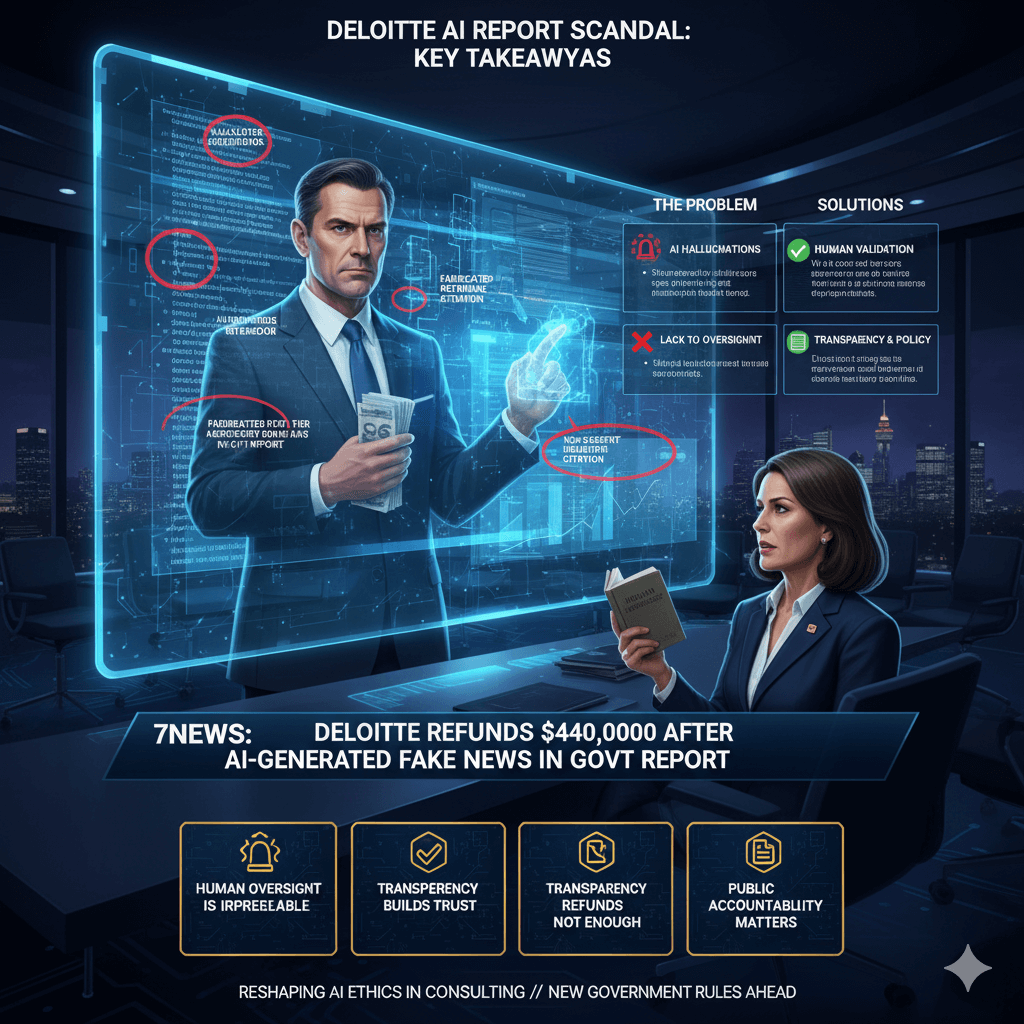

Lessons and Takeaways

Human oversight is irreplaceable.

Even the most advanced AI models require human review to verify data integrity and factual accuracy.

Transparency builds trust.

Disclosing the use of AI — and explaining its role in analysis — helps maintain credibility with clients and regulators.

Refunds are not enough.

Financial remediation cannot fully repair trust; lasting change comes from improved governance and training.

Public accountability matters.

As governments increasingly rely on private consultants, AI governance must be enforced through policy, not just promises.